Understanding Nodes and Node Pools is fundamental for anyone learning Kubernetes. By the end of this blog you should have a high-level understanding of both. I have added some real-life examples to make things clearer.

Node in Kubernetes:

In simple terms, a Node is a machine that runs your containerized applications. It can be a virtual machine (VM) or a physical machine.

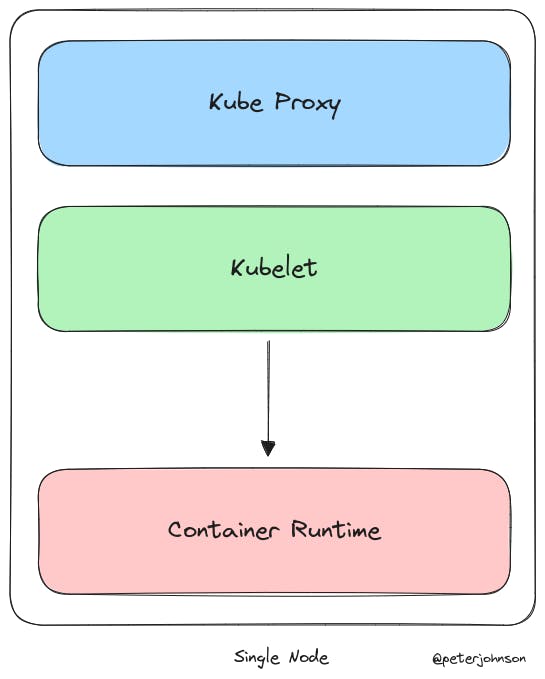

Since we are already talking about Nodes, let's also look at the components that make one up. As you can see from the image, there are three main components that we will discuss here: Kubelet, Kube Proxy and Container Runtime.

Kubelet:

In simple terms, the Kubelet is the agent on each node that receives instructions from the Control Plane and makes sure they are carried out on that node.

For Example:

The Control Plane (in case you didn't know, a Kubernetes cluster has a Control Plane and Worker Nodes; right now we are looking at the Worker Node) gives an instruction like: deploy a "WebApp" on Node 1. The Kubelet on Node 1 receives this instruction and takes care of starting the "WebApp" on Node 1.
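To make the idea a bit more concrete, here is a minimal sketch of that "receive the instruction, make the node match it" behaviour. It is only a toy model: `ToyKubelet`, `sync`, and the pod and node names are hypothetical names I made up for illustration, not the real Kubelet API.

```python
from dataclasses import dataclass, field


@dataclass
class ToyKubelet:
    """Hypothetical stand-in for the agent on one node (not the real Kubelet)."""
    node_name: str
    running: set[str] = field(default_factory=set)

    def sync(self, desired_pods: dict[str, str]) -> list[str]:
        """Make this node's running pods match what was assigned to it.

        desired_pods maps a pod name to the node it was assigned to.
        Returns a list of the actions taken during this sync.
        """
        wanted = {pod for pod, node in desired_pods.items() if node == self.node_name}
        actions = []
        # Start pods that were assigned to this node but are not running yet.
        for pod in sorted(wanted - self.running):
            self.running.add(pod)
            actions.append(f"start {pod}")
        # Stop pods that are running here but are no longer assigned to this node.
        for pod in sorted(self.running - wanted):
            self.running.remove(pod)
            actions.append(f"stop {pod}")
        return actions


# Assumed example data: "webapp" is assigned to node-1, "reports" to node-2.
assignments = {"webapp": "node-1", "reports": "node-2"}
kubelet = ToyKubelet("node-1")
first_sync = kubelet.sync(assignments)
second_sync = kubelet.sync(assignments)
print(first_sync)   # ['start webapp']
print(second_sync)  # [] because the node already matches the instruction
```

The second call does nothing because the node already runs what it was told to run. That "compare and correct" loop is the part worth remembering.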

Kube Proxy:

Kube Proxy is responsible for network communication between pods and services within the cluster. It maintains the network rules on each node so that traffic sent to a Service reaches the right pods.

For Example:

Suppose your web application has multiple microservices running in different pods. Kube Proxy ensures that when one microservice needs to communicate with another, the network traffic is correctly directed.
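Here is a rough sketch of the routing idea: a service name maps to several pod addresses, and each request is sent to one of them. The class `ToyServiceRouter`, the service name "cart", and the IP addresses are all hypothetical. The round-robin choice is my simplification, not a description of how Kube Proxy actually picks a pod.

```python
from itertools import cycle


class ToyServiceRouter:
    """Hypothetical model of Service-to-pod routing (not Kube Proxy's real code)."""

    def __init__(self) -> None:
        self._backends = {}

    def set_endpoints(self, service: str, pod_ips: list[str]) -> None:
        # Remember which pods currently back this service.
        self._backends[service] = cycle(pod_ips)

    def route(self, service: str) -> str:
        # Pick the next pod for this service in round-robin order.
        return next(self._backends[service])


router = ToyServiceRouter()
router.set_endpoints("cart", ["10.0.0.4", "10.0.1.7"])  # assumed pod IPs
picks = [router.route("cart") for _ in range(3)]
print(picks)  # ['10.0.0.4', '10.0.1.7', '10.0.0.4']
```

The calling microservice only needs to know the service name; which pod answers is decided for it.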

Container Runtime:

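The Container Runtime is the software on the node that actually runs containers. It pulls a container image, which packages an application together with its dependencies, and starts a container from it.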

Docker is one of the most widely known container tools, but there are other runtimes such as containerd and CRI-O. rkt (originally called Rocket) was another option, but it is no longer maintained.

For Example:

Imagine you have a web application that includes a web server, a database, and other components. With Docker as the runtime, each of these components runs in its own container, which makes the entire application easier to manage and deploy.

Node Pool in Kubernetes:

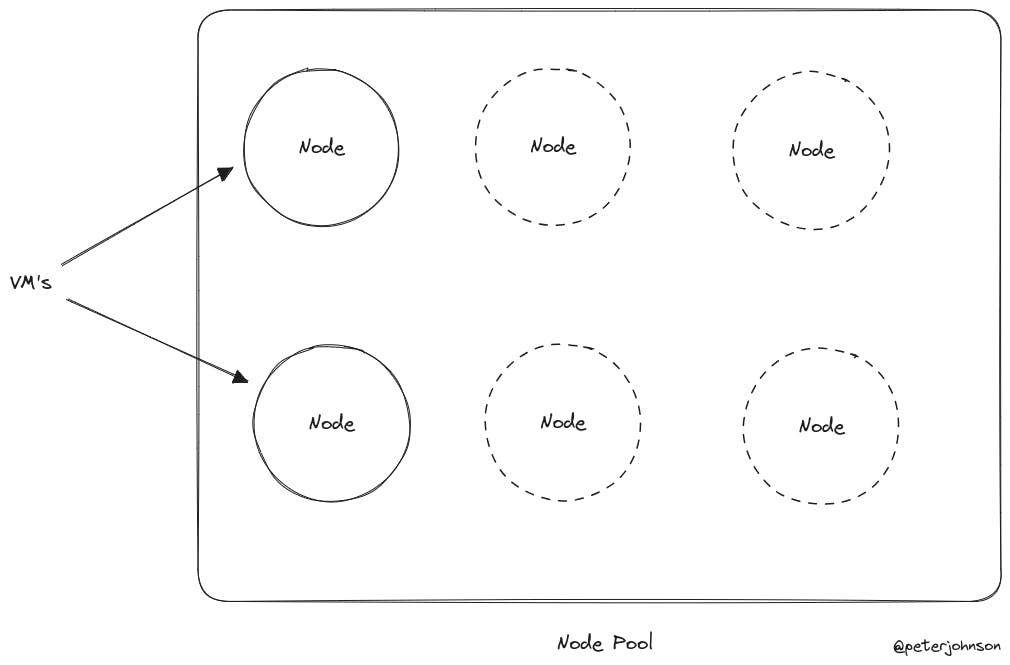

Now, think of a Node Pool as a collection of these Nodes: a group of machines within a Kubernetes cluster that share the same configuration.

Node Pools are useful when you want to allocate resources differently for specific workloads or stages of your application. For stages like development, testing, and production, you can create separate Node Pools (Azure also calls them agent pools) with different configurations to meet each stage's requirements. For instance, a production Node Pool can have more nodes than a development one.

When you create a Kubernetes cluster on a platform like Azure, it automatically creates a Node Pool (agent pool) with the number of nodes you specify. For instance, if you set the node count to 1, you'll have a single VM in your pool. This might be adequate for smaller applications or for learning. However, as your project moves through stages like development, staging, and production, you may need to either increase the number of nodes in your existing pool or create separate pools for each stage, based on specific requirements.

Example:

Consider an example where you're deploying an e-commerce web application into a Kubernetes cluster with two Node Pools: Development and Production. The Development pool is a simple setup with, let's say, 2 Nodes. You deploy your application there and everything works fine. Moving on to the Production pool, you need your application to be highly available, so you increase the node count in the Production pool to 4. As your application gains traction and traffic varies, you have the flexibility to add more Nodes dynamically.
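The same example can be sketched as a small data model. This is only an illustration: `NodePool`, `scale_to`, and the generated node names are hypothetical, and I am assuming the Production pool also starts at 2 Nodes before it is scaled to 4. On a real cluster you would change the node count through your cloud provider's tooling.

```python
from dataclasses import dataclass


@dataclass
class NodePool:
    """Hypothetical model of a Node Pool: a named group of identical nodes."""
    name: str
    node_count: int

    def scale_to(self, count: int) -> None:
        # Change how many nodes the pool should contain.
        if count < 0:
            raise ValueError("node count cannot be negative")
        self.node_count = count

    def node_names(self) -> list[str]:
        # Generate illustrative node names such as "production-node-1".
        return [f"{self.name}-node-{i}" for i in range(1, self.node_count + 1)]


cluster = {
    "development": NodePool("development", node_count=2),
    "production": NodePool("production", node_count=2),  # assumed starting size
}

# Production needs to be highly available, so grow that pool to 4 nodes.
cluster["production"].scale_to(4)

total_nodes = sum(pool.node_count for pool in cluster.values())
print(cluster["production"].node_names())
print(total_nodes)  # 6
```

Notice that scaling Production leaves the Development pool untouched, which is exactly why separate pools are handy.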

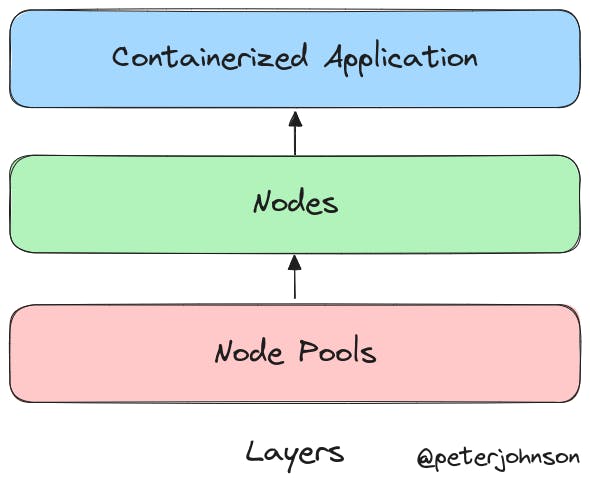

Layers:

Here I have tried to depict how the different components are arranged. At the base are the Node Pools, each holding 'n' Nodes as configured by the user, and these Nodes are responsible for running the containerized applications.

To sum it up, in Kubernetes, think of Nodes as the computers that run your apps, and Node Pools as groups of these computers. So, if you're deploying an app, you can start with a couple of Nodes, and as your app grows, you can easily add more Nodes to handle the increased traffic. This flexibility makes it simpler to manage and scale your applications in Kubernetes.